“This is crazy!”, that was my reaction at some point in PyCon Pune. This is one of my first conference where I participated in a lot of things starting from the website to audio/video and of course being the speaker. I saw a lot of aspects of how a conference works and where what can go wrong. I met some amazing people, people who impacted my life , people who I will never forget. I received so much of love and affection that I can never express in words. So before writing anything else I want to thank each and everyone of you , “Thank you!”.

My experience or association started the time when the PyCon Pune was being conceived Sayan asked me if I could volunteer for Droidcon so that I can learn how to handle A/V for PP, and our friends at HasGeek were generous enough to let me do that. The experience at Droidcon was crazy, I met a lot of people and made crazy lot of friends. Basically me and Haseeb were volunteering to learn the A/V stuff and Karthik was patient enough to walk us through the whole complex set up, to be very honest I didn’t get the whole picture till now but I some how able to manage. I learned a thing or two about manning the camera and how much work actually goes to record a conference.

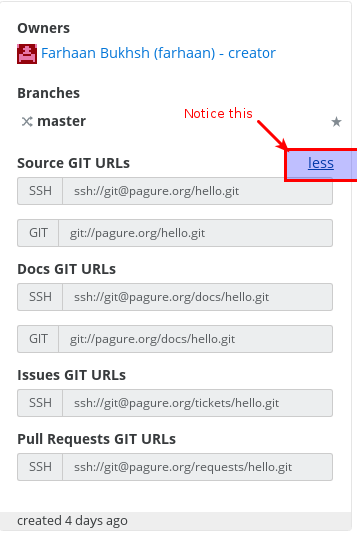

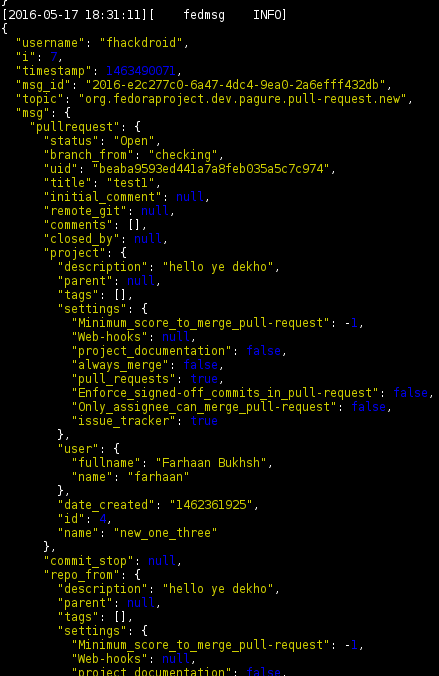

Since I was anyhow going to the conference I thought why not to apply for a talk but somehow I knew I wasn’t going to make it reason being the talks got rejected in a lot of other conferences 😛 . But anyhow being my stubborn self I don’t give up on rejection I gathered all the courage and got Vivek involved and we decided to apply for the talk and to my surprise it got in. This was our first conference talk and it was on one of the projects that we really really love, Pagure.

Since these things happened over a large span of time, by the time conference dates came I have nearly got out of touch with the A/V setup I only have vague idea about what is happening. So Sayan who is a one man army stepped in and he assured me that he will help me with getting the setup ready and we turned again to our friends at HasGeek and they were really humble to help us out this time and also help us with the instruments. We literally had a suitcase full of wires in case things go wrong. We spend around 3 days to up skill ourselves to handle the setup but this time the setup was very simple.

After all this happened and Sayan and Chandan took all the instruments to Pune. I arrived at Pune somewhere around two days before the conference the bus that I took from Bangalore to Pune dropped me somewhere near Telegaun which is near to Mumbai than Pune and I somehow managed to get back to Pune and reached Sayan and Chandan’s house. We were bunking together and there were more people about to come. I took some rest and then we were out , first stop was Reserved Bit , oh I can’t forget this place.

It is a perfect place for geeks and I loved every aspect of it. There I met Siddhesh for the first time we have had conversations over IRC though and met Nisha too. Amazing people the whole experience to travel to Reserved Bit and way back was amazing. We went to the venue to checkout where the camera will be and verify various aspects of the venue. After we came back I started working on the setup and man it was very tough and tricky to gather live feed from the camera.

First of all I was little hesitant to use any proprietary software but then I had no option so we somehow found a windows laptop and tried configuring it but almost everytime either we got a “BLUE SCREEN” or “UPDATES” which annoyed me , the sole reason of using windows was because we had a piece of hardware called capture cards, and the driver for which were not available. After long struggle and a lot of digging done by Siddhesh we got driver for Epiphan capture card for Linux and this was around 12 in the night and we all were still there at Reserved Bit. This gave all of us new hope and then it started we kind of got our minimalistic set up and Siddhesh did a “Compiler talk by Angle Fish” , it was a lot of fun by the time we got it working it was somewhere around 4 in the morning. After all this Sayan and Me actually took a walk back home and picked up Subho on the way. The next day CuriousLearner arrived and then Haseeb , Amit and Gaurav.

We were around 10 people squeezed in a single room but without any discomfort we kind of enjoyed our stay with occasional leg pulling to deep intense tech discussion the whole experience was just terrific. Then comes the actual venue setup that was one crazy thing so the video setup was working with Linux , we had Epiphan capture card working on Kernel version below 4.9 and OBS studio as a recording software. I actually spent a good number of hours to install OBS and downgrading kernel to 4.6 so that Epiphan driver works on at least 6 laptops. When we tried the setup on site and it broke because we didn’t take into account the audio from the mic. All of us were stuck in a state of panic then we realized that we have a mixer with us, but its power cord was left at Reserved Bit . By this time this setup kind of became our conference hack and we wanted it to work so badly. We actually ran back to Reserved Bit spent sometime there since we had some work and then quickly came back to the venue, connected the mixer and after few trial and run it worked.

“YES IT WORKED ” our efforts paid off, we recorded the whole conference using this setup, some of the recordings were a little glitchy and one other hack that we added was we weren’t recording the slides from speaker’s laptop we were doing it manually on our laptops. That means one copy of slide was being played on our laptops and we were recording it accordingly.

Did you know? The talk recordings for #pyconpune are being done on Linux! pic.twitter.com/F0wkb1OiZO

— Siddhesh Poyarekar (@siddhesh_p) February 16, 2017

Apart from this experience I actually got the opportunity to meet all the keynote speaker the first so I met Nick, Honza, Terri, John, Steven and Praveen. This was another experience in itself to know them and talk to the Rockstars of the FOSS WORLD.

As a speaker Kushal introduced me as the Speaker who is also the Cameraman for the event and that was may be the first time in a tech conference. Vivek and I have been collaborating over the talk for a long time and we figured out the order in which we need to speak and we spoke accordingly we kind of covered all the things that we wanted to and got a great response from the audience. I attended most of the talks since I was The A/V GUY but I had a huge help from rtnpro he was always there humble and ready to help.

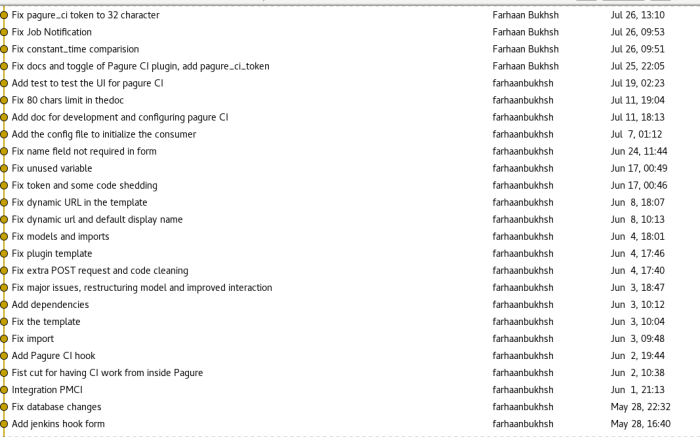

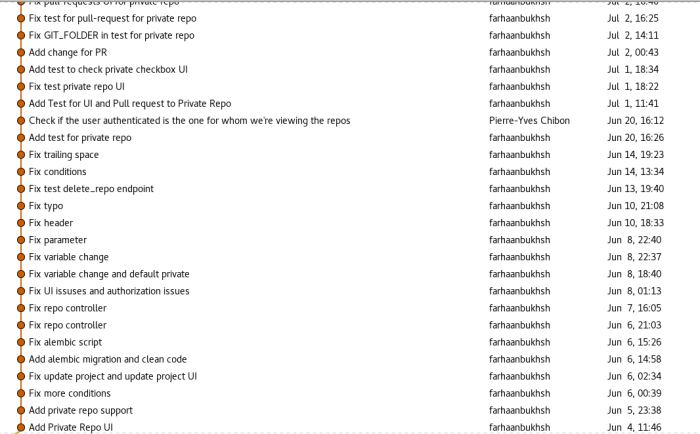

The conference came to an end where Nisha told all the people about the effort that was put in from every person and specially Sayan. After this we had two days of devsprint where we had amazing projects, Vivek and I were mentoring for Pagure and we got a lot of new contributors and quite a number of PRs ( 13 to be precise ), the devsprint was a run away success.

@kushaldas thanks for this 🙂 #flickr https://t.co/394HKYwyyg

— Farhaan Bukhsh (@fhackdroid) March 24, 2017

I also got chance to interact with mbuf and man I saw him smile and crack jokes for the first time and it was crazy fun , I think it was the dinner after the last day of the conference. One of the most amazing experience was to talk to Haris and yes his name is Haris not Harish. The whole experience was so lovely that I don’t think that it can be better than this.

PS: We fixed my Macbook too

@fhackdroid and I just fixed a Macbook Pro at @reservedbitpic.twitter.com/Kn03OOVh11

— Sayan Chowdhury (@yudocaa) February 20, 2017

PPS: Video of our talk at PyCon Pune